Understanding Windows Server Hyper-V Cluster Configuration, Performance and Security

In the context of Hyper-V, a Windows Server Failover Cluster brings multiple physical Hyper-V hosts together into a “cluster” of hosts. This aggregates CPU and memory resources attached to shared storage, which in turn makes it easy to migrate virtual machines between the Hyper-V hosts in the cluster. The shared storage can be a traditional SAN or, in Windows Server 2016, Storage Spaces Direct.

Because every host can reach the same shared storage, a virtual machine can be restarted on a different host in the Windows Server Failover Cluster if the physical host it was running on fails. This allows business-critical workloads to be brought back up very quickly even if a host in the cluster has failed.

Windows Server Failover Clustering also has other added benefits as they relate to Hyper-V workloads that are important to consider. In addition to allowing virtual machines to be highly available when hosts fail, the Windows Server Failover Cluster also allows for planned maintenance periods such as patching Hyper-V hosts.

Hyper-V administrators can patch a host by migrating virtual machines off it, applying patches, and then rehydrating the host with virtual machines. Cluster-Aware Updating allows this to be done in an automated fashion. Windows Server Failover Clustering also protects against corruption if the cluster hosts become separated from one another in the classic “split-brain” scenario. If two hosts attempt to write data to the same virtual disk, corruption can occur.

Windows Server Failover Clusters have a mechanism called quorum that prevents separated Hyper-V hosts in the cluster from inadvertently corrupting data. In Windows Server 2016, a new type of quorum witness, the cloud witness, has been introduced that can be used alongside the longstanding quorum mechanisms.

Failover Clustering is included in the Standard edition of Windows Server as well as the Datacenter edition. There is no difference between the two editions in Failover Clustering features and functionality. A Windows Server Failover Cluster is composed of two or more nodes that offer resources to the cluster as a whole. A maximum of 64 nodes per cluster is allowed with Windows Server 2016 Failover Clusters.

Additionally, Windows Server 2016 Failover Clusters can run a total of 8000 virtual machines per cluster. Although in this post we are referencing Hyper-V in general, Windows Server Failover Clusters can house many different types of services including file servers, print servers, DHCP, Exchange, and SQL just to name a few.

One of the primary benefits of Windows Server Failover Clusters, as already mentioned, is the ability to prevent corruption when cluster nodes become isolated from the rest of the cluster. Cluster nodes communicate via the cluster network to determine if the rest of the cluster is reachable. The cluster then performs a voting process of sorts that determines which cluster nodes have the node majority or can reach the majority of the cluster resources.

Quorum is the mechanism that determines which cluster nodes hold the majority and therefore win the vote to assume ownership of resources, such as virtual machine data in a Hyper-V cluster.

This becomes glaringly important in an even-node cluster, such as a cluster with (4) nodes. If a network split leaves two nodes on each side able to see only each other, neither side has a majority. Starting with Windows Server 2012, by default each node has a vote in the quorum voting process.

A disk or file share witness provides a tie-breaking vote by allowing one side of the partitioned cluster to claim this resource, thus breaking the tie. The cluster hosts that claim the disk or file share witness place a lock on the resource (a SCSI reservation in the case of a disk witness), which prevents the other side from obtaining the majority quorum vote. With odd-numbered cluster configurations, one side of a partitioned cluster will always have a majority, so the disk or file share witness is not needed.

Quorum received enhancements in Windows Server 2016 with the addition of the cloud witness. This allows using an Azure storage account and its reachability as the witness vote. A “0-byte” blob file is created in the Azure storage account for each cluster that utilizes the account.
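As a rough sketch of how the witness options above can be inspected and configured, the FailoverClusters PowerShell module provides Get-ClusterQuorum and Set-ClusterQuorum. The storage account name, access key, and file share path below are hypothetical placeholders, not values from this article.

```powershell
# Run on a cluster node with the FailoverClusters module available.

# Show the current quorum configuration and the witness in use (if any)
Get-ClusterQuorum

# Show the vote each node currently holds
Get-ClusterNode | Format-Table Name, State, NodeWeight, DynamicWeight

# Option 1: cloud witness (Windows Server 2016 and later).
# "mystorageaccount" and the key are placeholders for your own Azure storage account.
Set-ClusterQuorum -CloudWitness -AccountName "mystorageaccount" -AccessKey "<storage-account-access-key>"

# Option 2: file share witness on a server outside the cluster (hypothetical path)
# Set-ClusterQuorum -FileShareWitness "\\fileserver01\ClusterWitness"
```

Only one witness is active at a time, so pick the option that matches your environment rather than running both.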

Windows Server Failover Clusters Hyper-V Specific Considerations

- Cluster Shared Volumes

- ReFS

- Storage Spaces Direct

Cluster Shared Volumes

Cluster Shared Volumes or CSVs provide specific benefits for Hyper-V virtual machines in allowing more than one Hyper-V host to have read/write access to the volume or LUN where virtual machines are stored. In legacy versions of Hyper-V before CSVs were implemented, only one Windows Server Failover Cluster host could have read/write access to a specific volume at a time. This created complexities when thinking about high availability and other mechanisms that are crucial to running business-critical virtual machines on a Windows Server Failover Cluster.

Cluster Shared Volumes solved this problem by allowing multiple nodes in a failover cluster to simultaneously have read/write access to the same LUN provisioned with NTFS. Because every Hyper-V host already has access to the storage LUNs, any surviving host can quickly take over the compute and memory for virtual machines when a node in the Windows Server Failover Cluster fails.
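A minimal sketch of converting a clustered disk into a Cluster Shared Volume is shown below. The resource name "Cluster Disk 1" is an assumption (it is the default name the cluster typically assigns); substitute the name shown in your own cluster.

```powershell
# Confirm the clustered disk exists ("Cluster Disk 1" is a placeholder name)
Get-ClusterResource -Name "Cluster Disk 1"

# Convert the clustered disk into a Cluster Shared Volume
Add-ClusterSharedVolume -Name "Cluster Disk 1"

# The volume now appears under C:\ClusterStorage on every node
Get-ClusterSharedVolume | Format-List Name, State, OwnerNode
```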

ReFS

ReFS is short for “Resilient File System” and is the newest file system released by Microsoft. Many speculate that it will eventually replace NTFS. ReFS touts many advantages when thinking about Hyper-V environments. It is resilient by nature, meaning there is no chkdsk functionality as errors are corrected on the fly.

However, one of the most powerful features of ReFS related to Hyper-V is the block cloning technology. With block cloning the file system merely changes metadata as opposed to moving actual blocks. This means that operations that are typically I/O intensive on NTFS, such as zeroing out a disk or creating and merging checkpoints, are almost instantaneous with ReFS.

ReFS should not be used with SAN/NFS configurations, however, because the storage operates in I/O redirected mode in this configuration: all I/O is sent to the coordinator node, which can lead to severe performance issues. ReFS is recommended with Storage Spaces Direct, which uses RDMA network adapters and does not see the performance hit that SAN/NFS configurations do.
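To see whether a Cluster Shared Volume is running in direct or redirected mode on each node, the Get-ClusterSharedVolumeState cmdlet can be queried. This is a read-only check and a sketch only; the output depends entirely on your cluster.

```powershell
# StateInfo reports Direct, FileSystemRedirected, or BlockRedirected per node and volume.
# The two reason columns explain why a volume is redirected, when it is.
Get-ClusterSharedVolumeState |
    Format-Table Name, Node, StateInfo, FileSystemRedirectedIOReason, BlockRedirectedIOReason
```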

Storage Spaces Direct

Storage Spaces Direct is Microsoft’s software defined storage solution that allows creating shared storage by using locally attached drives on the Windows Server Failover Cluster nodes. It was introduced with Windows Server 2016 and allows two configurations:

- Converged

- Hyper-converged

With Storage Spaces Direct you have the ability to utilize caching, storage tiers, and erasure coding to create hardware abstracted storage constructs that allow running Hyper-V virtual machines with scale and performance more cheaply and efficiently than using traditional SAN storage.
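The following is a hedged sketch of enabling Storage Spaces Direct on an existing cluster and creating a first volume. The node names, volume name, and size are hypothetical; validate against your own hardware before enabling anything in production.

```powershell
# Hypothetical node names; replace with the nodes in your cluster
$nodes = "HV01", "HV02", "HV03", "HV04"

# Validate the nodes, including the Storage Spaces Direct tests
Test-Cluster -Node $nodes -Include "Storage Spaces Direct", "Inventory", "Network", "System Configuration"

# Claim the eligible local drives and build the storage pool
Enable-ClusterStorageSpacesDirect

# Create a ReFS-formatted Cluster Shared Volume from the pool (name and size are examples)
New-Volume -StoragePoolFriendlyName "S2D*" -FriendlyName "Volume01" -FileSystem CSVFS_ReFS -Size 1TB
```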

- Use Hyper-V Core installations

- Sizing the Hyper-V Environment Correctly

- Network Teaming and Configuration

- Storage Configuration

- Operating System Patches Uniformity

The above configuration areas represent a large portion of potential Hyper-V configuration mistakes that many make in production environments. Let’s take a closer look at the above in more detail to explain why they are extremely important to get right in a production environment and what can be done to ensure you do get them right.

Use Hyper-V Core installations

Using the Windows Server 2016 Server Core installation to run the Hyper-V role is the recommended approach for production workloads on Hyper-V nodes. With the wide range of management tools that can be leveraged with Hyper-V core, such as PowerShell remoting or running the GUI Hyper-V Manager on another server, running Hyper-V core presents no additional administrative burden with today’s tools.

Sizing the Hyper-V Environment Correctly

A great tool that can be utilized to correctly size the needed number of cores, memory, and disk space is the Microsoft Assessment and Planning Toolkit. It can calculate the current cores, memory, and storage being utilized by production workloads in an automated fashion so you can easily gather current workload demands. Then, you can calculate for growth in the environment based on the projected amount of new server resources that will need to be provisioned in the upcoming future.

The Microsoft Assessment and Planning Toolkit can be downloaded here: https://www.microsoft.com/en-us/download/details.aspx?id=7826

Network Teaming and Configuration

- To ensure network quality of service

- To provide network redundancy

- To isolate traffic to defined networks

- Where applicable, take advantage of Server Message Block (SMB) Multichannel

Proper design of network connections for redundancy generally involves teaming connections together. There are certainly major mistakes that can be made with the Network Teaming configuration that can lead to major problems when either hardware fails or a failover occurs. When cabling and designing network connections on Hyper-V hosts, you want to make sure that the cabling and network adapter connections are “X’ed” out, meaning that there is no single point of failure with the network path. The whole reason that you want to team network adapters is so that if you have a failure with one network card, the other “part” of the team (the other network card) will still be functioning.

Mistakes however can be made when setting up network teams in Hyper-V cluster configurations. A common mistake is to team ports off the same network controller. This issue does not present itself until a hardware failure of the network controller takes both ports from the same network controller offline.

Also, if using different makes/models of network controllers in a physical Hyper-V host, it is not best practice to create a team between those different models of network controllers. There can potentially be issues with the different controllers and how they handle the network traffic with the team. You always want to use the same type of network controller in a team.

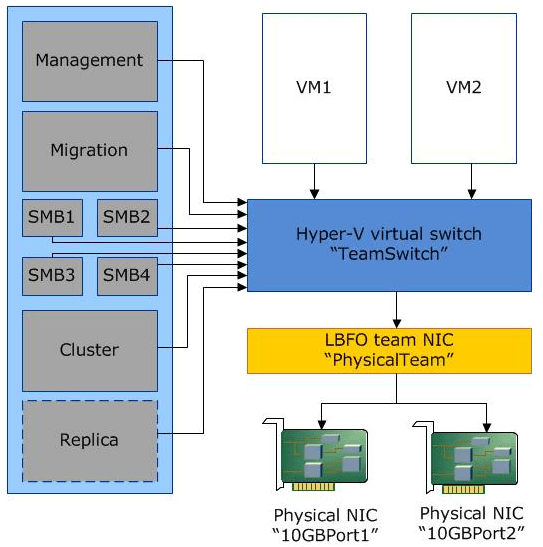

Properly setting up your network adapters for redundancy and combining available controller ports together can bring many advantages such as being able to make use of “converged networking”. Converged networking with Hyper-V is made possible by combining extremely fast NICs (generally higher than 10 Gbps) and “virtually” splitting traffic on your physical networks inside the hypervisor. So, the same network adapters are used for different kinds of traffic.
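One way converged networking can be sketched in Windows Server 2016 is with a Switch Embedded Teaming (SET) virtual switch and host virtual adapters separated by VLAN. The adapter names, switch name, and VLAN IDs below are assumptions chosen for illustration.

```powershell
# Create a virtual switch that teams two physical NICs ("NIC1" and "NIC2" are placeholders)
New-VMSwitch -Name "ConvergedSwitch" -NetAdapterName "NIC1", "NIC2" `
    -EnableEmbeddedTeaming $true -AllowManagementOS $false

# Hypothetical traffic types and VLAN IDs; align these with your own network design
$hostAdapters = @{ "Management" = 10; "LiveMigration" = 20; "Cluster" = 30 }

foreach ($name in $hostAdapters.Keys) {
    # Add a host (management OS) virtual adapter for this traffic type
    Add-VMNetworkAdapter -ManagementOS -Name $name -SwitchName "ConvergedSwitch"

    # Tag the adapter with its VLAN so the traffic stays isolated
    Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName $name -Access -VlanId $hostAdapters[$name]
}
```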

Storage Configuration

Aside from the performance benefits that MPIO brings, it also provides redundancy: a path between the Hyper-V host and the storage system can go down and the virtual machine stays online. MPIO, which stands for Multipath I/O, allows for extremely performant and redundant storage paths to service Hyper-V workloads.

As an example, to enable multipath support in Hyper-V for iSCSI storage, run the following command on your Hyper-V host(s):

- Enable-MSDSMAutomaticClaim -BusType iSCSI

To enable round-robin on the paths:

- Set-MSDSMGlobalDefaultLoadBalancePolicy -Policy RR

Set the best-practice disk timeout to 60 seconds:

- Set-MPIOSetting -NewDiskTimeout 60

Another best practice to keep in mind for storage is to always consult your specific vendor about the Windows storage setting values. This ensures performance is tuned according to their specific requirements.

- Physical NIC considerations

- Firmware and drivers

- Addressing

- Enable Virtual Machine Queue or VMQ

- Jumbo frames

- Create redundant paths

- Windows and Virtual Network considerations

- Create dedicated networks for traffic types

- Use NIC teaming, except on the iSCSI network, where MPIO should be used

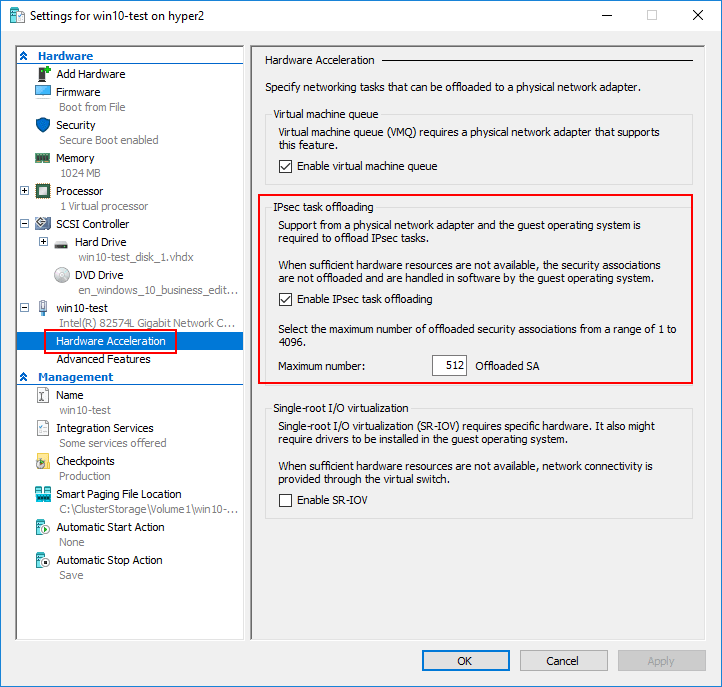

- Disable TCP Chimney Offloading, and IPsec Offloading

- Uncheck management traffic on dedicated Virtual Machine virtual switches

When it comes to IP addressing schemes, it goes without saying, never use DHCP for addressing the underlying network layer in a Hyper-V environment. Using automatic addressing schemes can lead to communication issues down the road. A good practice is to design out your IP addresses, subnets, VLANs, and any other network constructs before setting up your Hyper-V host or cluster. Putting forethought into the process helps to ensure there are no issues with overlapping IPs, subnets, etc when it comes down to implementing the design. Statically assign addresses to your host or hosts in a cluster.
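As an illustration of static addressing, the sketch below assigns a fixed address to a host adapter. The interface alias, addresses, and DNS servers are made-up values for the example.

```powershell
# "Management" is a placeholder interface alias; list yours with Get-NetAdapter
$alias = "Management"

# Turn off DHCP on the interface before assigning a static address
Set-NetIPInterface -InterfaceAlias $alias -Dhcp Disabled

# Assign the static IP, prefix length, and gateway (example values)
New-NetIPAddress -InterfaceAlias $alias -IPAddress 192.168.10.11 -PrefixLength 24 -DefaultGateway 192.168.10.1

# Point the interface at your DNS servers (example values)
Set-DnsClientServerAddress -InterfaceAlias $alias -ServerAddresses 192.168.10.5, 192.168.10.6
```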

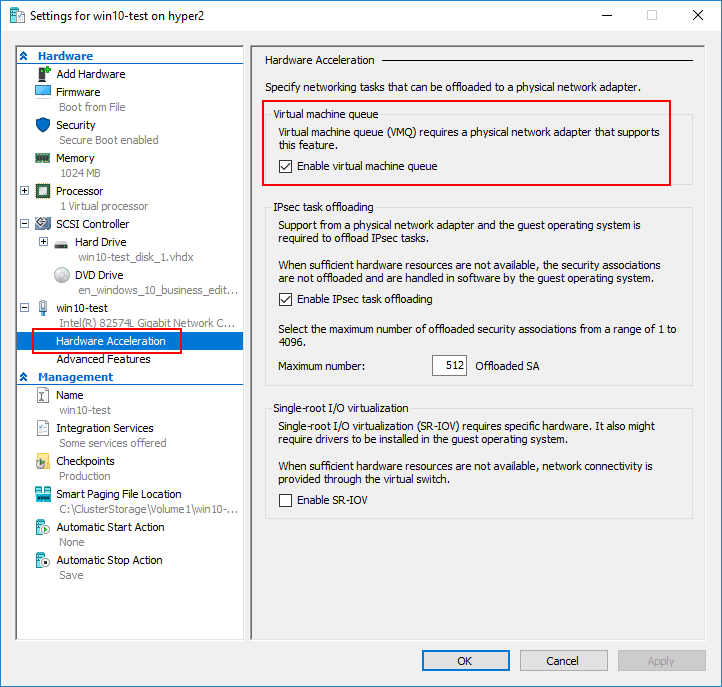

Today’s modern physical NICs inherently have features that dramatically improve performance, especially in virtualized environments. One such technology is Virtual Machine Queue or VMQ enabled NICs. VMQ enables many hardware virtualization benefits that allow much more efficient network connectivity for TCP/IP, iSCSI, and FCoE. If your physical NICs support VMQ, make sure to enable it.
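A short sketch for checking and enabling VMQ on a physical adapter follows. "NIC1" is a placeholder adapter name.

```powershell
# Show which physical adapters support VMQ and whether it is enabled
Get-NetAdapterVmq | Format-Table Name, InterfaceDescription, Enabled, NumberOfReceiveQueues

# Enable VMQ on a specific adapter ("NIC1" is a placeholder)
Enable-NetAdapterVmq -Name "NIC1"
```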

Use jumbo frames with iSCSI, Live Migration, and Cluster Shared Volumes or CSV networks. Jumbo frames are defined as any Ethernet frame carrying a payload larger than 1500 bytes. Typically, in a virtualization environment, jumbo frames will be set to a frame size of 9000 bytes or a little larger. This may depend on the hardware you are using, such as the network switch connecting devices. By making use of jumbo frames, traffic throughput can be significantly increased while using fewer CPU cycles. This allows for much more efficient transmission of frames for the generally high-traffic communication of iSCSI, Live Migration, and CSV networks.

Another key consideration when thinking about the physical network cabling of your Hyper-V host/cluster is to always have redundant paths so that there is no single point of failure. This is accomplished by using multiple NICs cabled to multiple physical switches, which creates redundant paths. This ensures that if one link goes down, critical connected networks such as an iSCSI network still have a connected path.
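The sketch below shows one way to enable and verify jumbo frames on an adapter. The adapter name and target address are placeholders, and the accepted value for the jumbo packet setting (9014 here) is driver dependent, so check what your NIC reports first.

```powershell
# "iSCSI1" is a placeholder adapter name
# Inspect the current jumbo packet setting and the values the driver accepts
Get-NetAdapterAdvancedProperty -Name "iSCSI1" -RegistryKeyword "*JumboPacket" |
    Format-List DisplayName, DisplayValue, ValidDisplayValues

# Set the jumbo packet size (9014 is common, but your driver may expect a different value)
Set-NetAdapterAdvancedProperty -Name "iSCSI1" -RegistryKeyword "*JumboPacket" -RegistryValue 9014

# Verify end to end: 8972 bytes of ICMP payload + 28 bytes of headers = 9000 bytes.
# -f sets "don't fragment", so the ping fails if any hop cannot pass the full frame.
ping.exe -f -l 8972 192.168.50.20
```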

- CSV or Heartbeat

- iSCSI

- Live Migration

- Management

- Virtual Machine Network

Creating dedicated networks for each type of network communication allows segregating the various types of traffic and is best practice from both a security and performance standpoint. There are various ways of doing this as well. Traffic can be segregated either by using multiple physical NICs or by aggregating multiple NICs and using VLANs to segregate the traffic.

As mentioned in the physical NIC considerations, having redundant paths enables high availability. By teaming NICs you are able to take advantage of both increased performance and high availability. A NIC team creates a single “virtual” NIC that Windows is able to utilize as if it were a single NIC, even though it contains multiple physical NICs underneath. If one NIC is disconnected, the “team” is still able to operate with the other connected NIC. With iSCSI connections, however, we don’t want to use NIC teaming, but rather Multipath I/O or MPIO. NIC teams increase aggregate throughput across multiple unique traffic flows but do not improve the throughput of a single traffic flow such as iSCSI. With MPIO, iSCSI traffic is able to take advantage of all the underlying NIC connections for the flows between the hosts and the iSCSI target(s).

Do not use TCP Chimney Offloading or IPsec Offloading with Windows Server 2016. These technologies have been deprecated in Windows Server 2016 and can impact server and networking performance. To disable TCP Chimney Offload, from an elevated command prompt run the following commands:

- Netsh int tcp show global – This shows the current TCP settings

- netsh int tcp set global chimney=disabled – Disables TCP Chimney Offload, if enabled

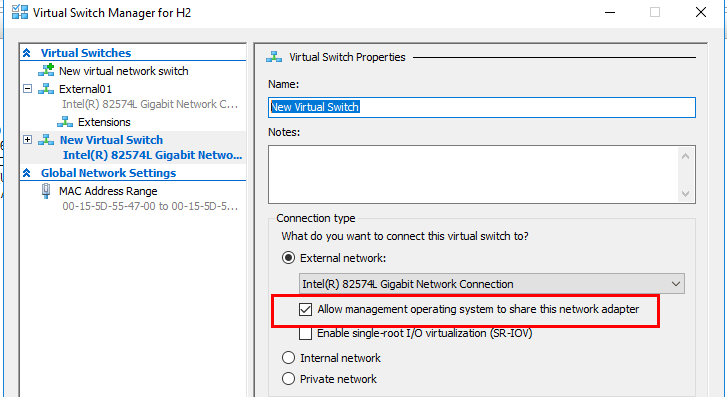

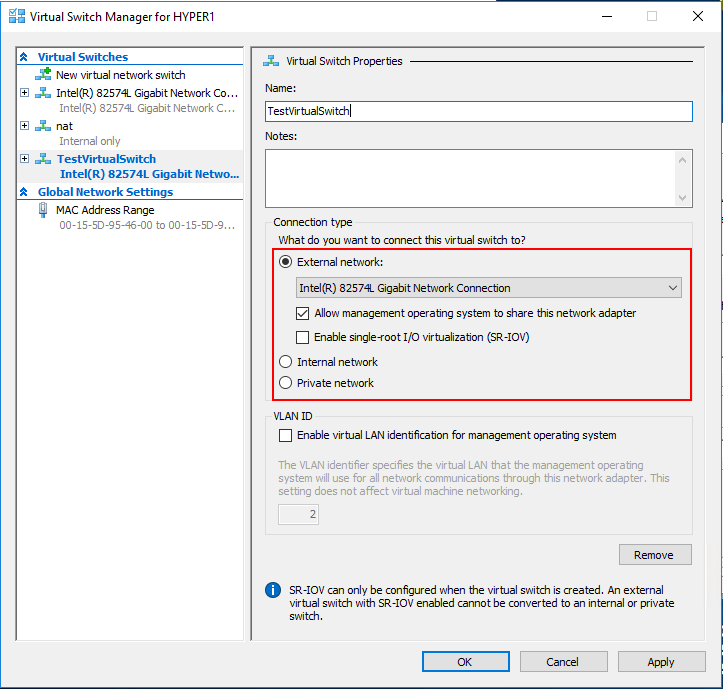

Hyper-V allows the ability to enable management traffic on new virtual switches created. It is best practice to have the management traffic isolated to a dedicated virtual switch and uncheck “allow management operating system to share this network adapter” on any dedicated virtual machine virtual switch.

In addition to traditional networking in the true sense, Hyper-V virtual switches also allow for and provide policy enforcement for security, isolating resources, and ensuring SLAs. These additional features are powerful tools that allow today’s often multi-tenant environments to have the ability to isolate workloads as well as provide traffic shaping. This also assists in protecting against malicious virtual machines.

The Hyper-V virtual switch is highly extensible. Using the Network Device Interface Specification or NDIS filters as well as Windows Filtering Platform or WFP, Hyper-V virtual switches can be extended by plugins written specifically to interact with the Hyper-V virtual switch. These are called Virtual Switch Extensions and can provide enhanced networking and security capabilities.

- ARP/ND Poisoning (spoofing) protection – A common method of attack that can be used by a threat actor on the network is MAC spoofing. This allows an attacker to appear to be coming from a source illegitimately. Hyper-V virtual switches prevent this type of behavior by providing MAC address spoofing protection

- DHCP Guard protection – With DHCP guard, Hyper-V is able to protect against a rogue VM being used as a DHCP server, which helps to prevent man-in-the-middle attacks

- Port ACLs – Port ACLs allow administrators to filter traffic based on MAC or IP addresses or ranges, which allows effectively setting up network isolation and microsegmentation

- VLAN trunks to VM – Allows Hyper-V administrators to direct specific VLAN traffic to a specific VM

- Traffic monitoring – Administrators can view traffic that is traversing a Hyper-V virtual switch

- Private VLANs – Private VLANs can effectively microsegment traffic as it is basically a VLAN within a VLAN. VMs can be allowed or prevented from communicating with other VMs within the private VLAN construct
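As a sketch of the port ACL capability listed above, the Hyper-V module exposes Add-VMNetworkAdapterAcl. The VM name and address ranges here are hypothetical.

```powershell
# "Web01" and the address ranges are placeholders for illustration

# Block all traffic between the VM and the 10.0.0.0/8 range
Add-VMNetworkAdapterAcl -VMName "Web01" -RemoteIPAddress 10.0.0.0/8 -Direction Both -Action Deny

# Allow a single management host; the longest matching prefix takes precedence
Add-VMNetworkAdapterAcl -VMName "Web01" -RemoteIPAddress 10.0.1.5/32 -Direction Both -Action Allow

# Review the ACLs applied to the VM's network adapters
Get-VMNetworkAdapterAcl -VMName "Web01"
```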

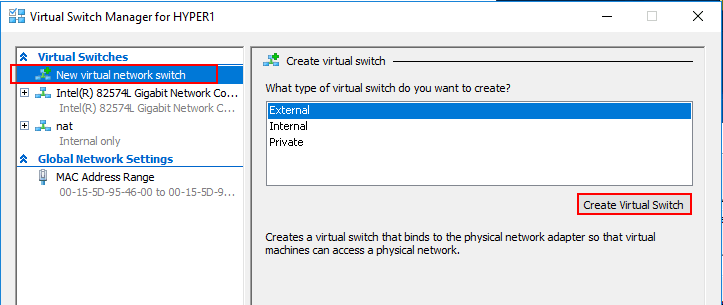

There are three different connectivity configurations for the Hyper-V Virtual Switch that can be configured in Hyper-V. They are:

- Private Virtual Switch

- Internal Virtual Switch

- External Virtual Switch

Private Virtual Switch

The Private Virtual Switch only allows communication between the virtual machines connected to it.

Internal Virtual Switch

The Internal Virtual Switch only allows communication between the virtual adapters of connected VMs and the management operating system.

External Virtual Switch

An External Virtual Switch allows communication between virtual adapters connected to virtual machines, the management operating system, and the physical network. It uses the physical adapters connected to the physical switch to communicate externally.

With the external virtual switch, virtual machines can be connected to the outside world without any additional routing mechanism in place. However, with both private and internal switches, there must be some type of routing functionality that allows getting traffic from the internal/private virtual switches to the outside. The primary use case of the internal and private switches is to isolate and secure traffic. When connected to these types of virtual switches, traffic is isolated to only those virtual machines connected to the virtual switch.

This is similar in feel and function for VMware administrators who have experience with the distributed virtual switch. The configuration for the distributed virtual switch is stored at the vCenter Server level. The configuration is then deployed from vCenter to each host rather than from the host side.

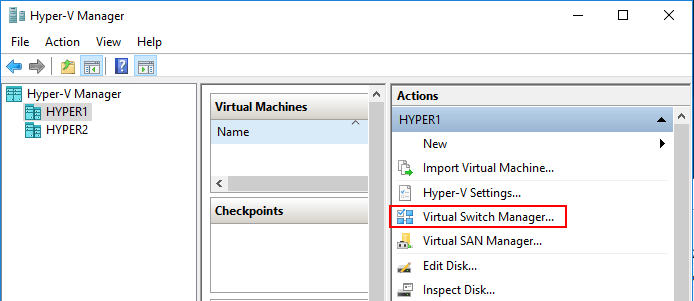

Creating a new virtual network switch in the Hyper-V Manager Virtual Switch Manager for Hyper-V.

Looking at the Hyper-V virtual switch properties, you can set the Connection type as well as the VLAN ID for the new Hyper-V virtual switch.

Creating Hyper-V Virtual Switches with PowerShell

Using PowerShell for virtual switch creation is a great way to achieve automation in a Hyper-V environment. PowerShell makes it easy to create new Hyper-V virtual switches in just a few simple one-liner cmdlets.

- Get-NetAdapter – make note of the names of the network adapters

- External Switch – New-VMSwitch -Name "SwitchName" -NetAdapterName "AdapterName" -AllowManagementOS $true

- Internal Switch – New-VMSwitch -Name "SwitchName" -SwitchType Internal

- Private Switch – New-VMSwitch -Name "SwitchName" -SwitchType Private
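Put together, the three switch types can be created in a short script such as the sketch below. The switch names and the physical adapter name "Ethernet 2" are placeholders; use the names returned by Get-NetAdapter on your host.

```powershell
# List physical adapters so you can pick the one to bind the external switch to
Get-NetAdapter | Format-Table Name, InterfaceDescription, Status, LinkSpeed

# External switch bound to a physical adapter, shared with the management OS
New-VMSwitch -Name "External01" -NetAdapterName "Ethernet 2" -AllowManagementOS $true

# Internal switch: VMs and the management OS only
New-VMSwitch -Name "Internal01" -SwitchType Internal

# Private switch: VMs only
New-VMSwitch -Name "Private01" -SwitchType Private

# Confirm the result
Get-VMSwitch | Format-Table Name, SwitchType, NetAdapterInterfaceDescription
```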

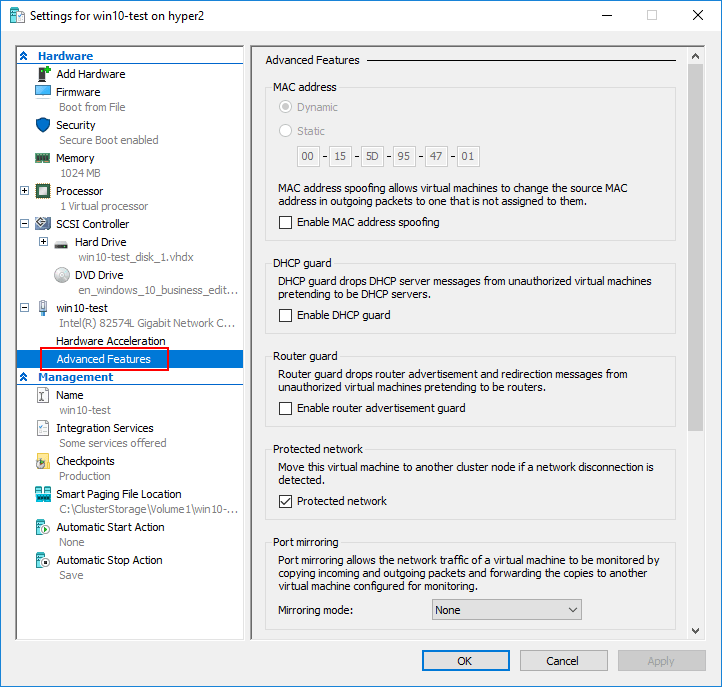

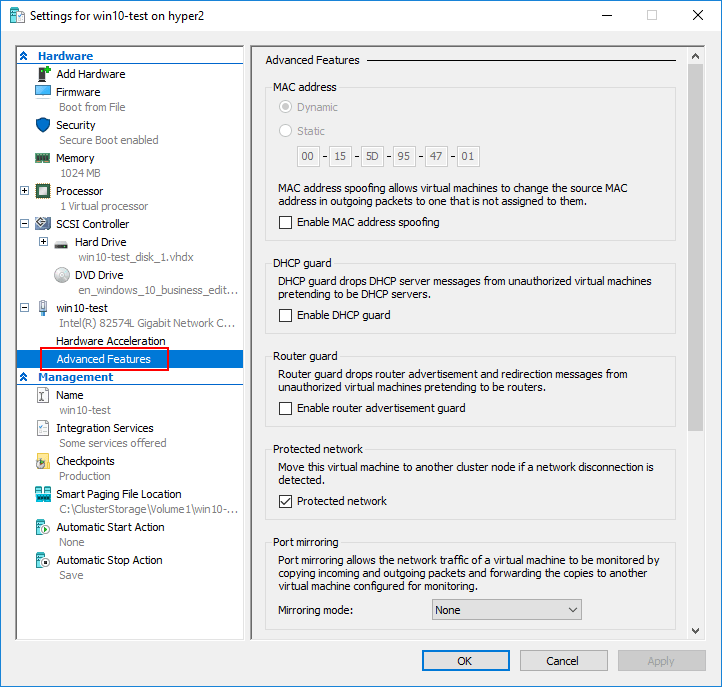

While not directly related to the Hyper-V virtual switch configuration, the virtual machine level Advanced Features include several very powerful network features made possible by the Hyper-V virtual switch including:

- DHCP guard – Protects against rogue DHCP servers

- Router guard – Protects against rogue routers

- Protected network – A high availability mechanism that ensures a virtual machine is not disconnected from the network due to a failure on a Hyper-V host

- Port Mirroring – Allows monitoring traffic

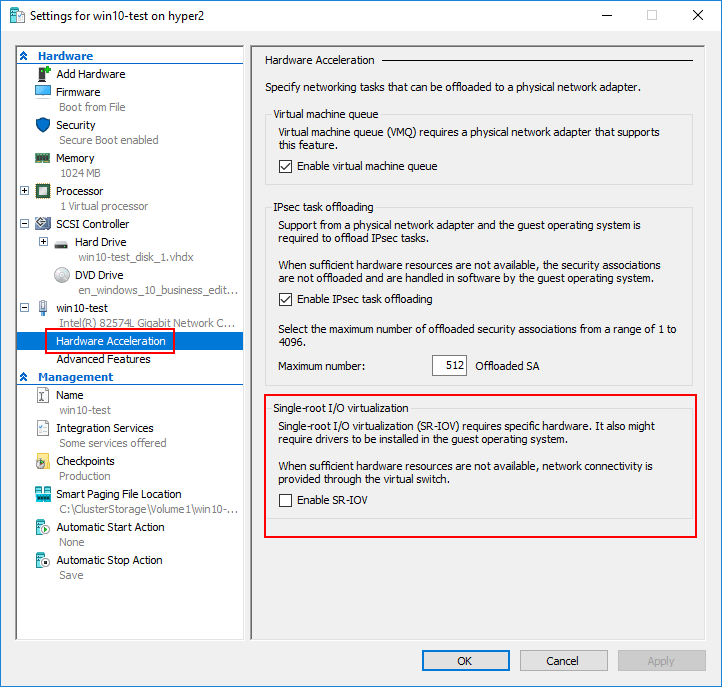

- Virtual machine queue

- IPsec Task offload

- SR-IOV

- DHCP Guard, Router Guard, Protected Network, and Port Mirroring

Let’s take a look at these Hyper-V advanced network settings and how they can be used and implemented in an organization’s Hyper-V infrastructure.

Note: There have been known issues with certain network cards, such as Broadcom branded cards, where enabling Virtual Machine Queue actually has the opposite effect. This seems to have been an issue with earlier versions of Hyper-V and has since been overcome with later Hyper-V releases and firmware updates from network card manufacturers.

Disabling or enabling VMQ at the virtual network adapter level can be accomplished with the Set-VMNetworkAdapter PowerShell cmdlet. A weight of 0 disables VMQ on the adapter:

- Set-VMNetworkAdapter -ManagementOS -Name "AdapterName" -VmqWeight 0

You can set the maximum number of security associations that can be offloaded to the physical adapter in PowerShell, for example:

- Set-VMNetworkAdapter -VMName "VMName" -IPsecOffloadMaximumSecurityAssociation 512

The DHCP guard feature is a great way to ensure that a virtual machine is not enabled as a DHCP server accidentally or intentionally without authorization. When the DHCP guard feature is turned on, the Hyper-V host drops DHCP server messages from unauthorized virtual machines that are attempting to act as a DHCP server on the network.

With the Router guard feature, the Hyper-V host prevents virtual machines from advertising themselves on the network as a router and possibly causing routing loops or wreaking other havoc on the network.

Protected Network is a great feature for high availability. When set, this feature proactively moves the virtual machine to another cluster node if a network disconnection condition is detected on the virtual machine. This is enabled by default.

Port mirroring is a great way to either troubleshoot a network issue or perhaps perform security reconnaissance on the network. It typically mirrors traffic from one “port” to another “port” allowing a TAP device to be installed on the mirrored port to record all network traffic. With the Port mirroring virtual machine setting, Hyper-V administrators can mirror the network traffic of a virtual machine to another virtual machine that has monitoring utilities installed.
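The four settings described above can also be set with Set-VMNetworkAdapter, as in the following sketch. The VM names "App01" and "Monitor01" are hypothetical.

```powershell
# "App01" and "Monitor01" are placeholder VM names

# Drop DHCP server and router advertisement messages originating from this VM
Set-VMNetworkAdapter -VMName "App01" -DhcpGuard On -RouterGuard On

# Protected network: $false means the adapter IS monitored by the cluster,
# so the VM is moved if its network connection is lost
Set-VMNetworkAdapter -VMName "App01" -NotMonitoredInCluster $false

# Port mirroring: copy traffic from App01 to a monitoring VM on the same virtual switch
Set-VMNetworkAdapter -VMName "App01" -PortMirroring Source
Set-VMNetworkAdapter -VMName "Monitor01" -PortMirroring Destination
```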

SANs that are enabled with iSCSI present storage targets to clients who are initiators. In a virtualization environment, the clients or initiators are the hypervisor hosts. The targets are the LUNs that are presented to the hypervisor hosts for storage. The iSCSI LUNs act as if they are local storage to the hypervisor host.

- Multiple Hyper-V Hosts – Configure at least two Hyper-V hosts in a cluster with three or more being recommended for increased HA

- Redundant network cards – Have two network cards dedicated to iSCSI traffic

- Redundant Ethernet switches – Two Ethernet switches dedicated to iSCSI traffic. Cabling from redundant network cards should be “X-ed” out so that there is no single point of failure on the path to storage from each Hyper-V host

- SAN with redundant controllers – A SAN with multiple controllers (most enterprise ready SANs today are configured with at least (2) controllers). This protects against failures caused by a failed storage controller. When one fails, it “fails over” to the secondary controller

With Windows Server 2016, the network convergence model allows aggregating various types of network traffic across the same physical hardware. The Hyper-V networks suited for the network convergence model include the management, cluster, Live Migration, and VM networks. However, iSCSI storage traffic needs to be separated from the other types of network traffic found in the network convergence model as per Microsoft best practice regarding storage traffic.

With network configuration, you will want to configure your two network adapters for iSCSI traffic with unique IPs that will communicate with the storage controller(s) on the SAN. There are a few other considerations when configuring the iSCSI network cards on the Hyper-V host including:

- Use Jumbo frames where possible – Jumbo frames allow a larger data size to be transmitted before the packet is fragmented. This generally increases performance for iSCSI traffic and lowers the CPU overhead for the Hyper-V hosts. However, it does require that all network hardware used in the storage area network is capable of utilizing jumbo frames

- Use MPIO – MPIO or Multipath I/O is used with accessing storage rather than using port aggregation technology such as LACP on the switch or Switch Embedded Teaming for network convergence. Link aggregation technologies such as LACP only improve the throughput of multiple I/O flows coming from different sources. Since the flows for iSCSI will not appear to be unique, it will not improve the performance of iSCSI traffic. MPIO on the other hand works from the perspective of the initiator and target so can improve the performance of iSCSI

- Use dedicated iSCSI networks – While part of the appeal of iSCSI is the fact that it can run alongside other types of network traffic, running iSCSI storage traffic on dedicated switch fabric is best practice for the best performance

On the storage side of things, if your SAN supports Offloaded Data Transfer or ODX, this can greatly increase storage performance as well. Microsoft’s Offloaded Data Transfer is also called copy offload and enables direct data transfers within a storage device or between storage devices without involving the host. Without ODX, by comparison, the data is read from the source and transferred across the network to the host. Then the host transfers the data back over the network to the destination. The ODX transfer eliminates the host as the middle party and significantly improves the performance of copying data. This also lowers the host CPU utilization since the host no longer has to process this traffic. Network bandwidth is also saved since the network is no longer needed to copy the data back and forth.

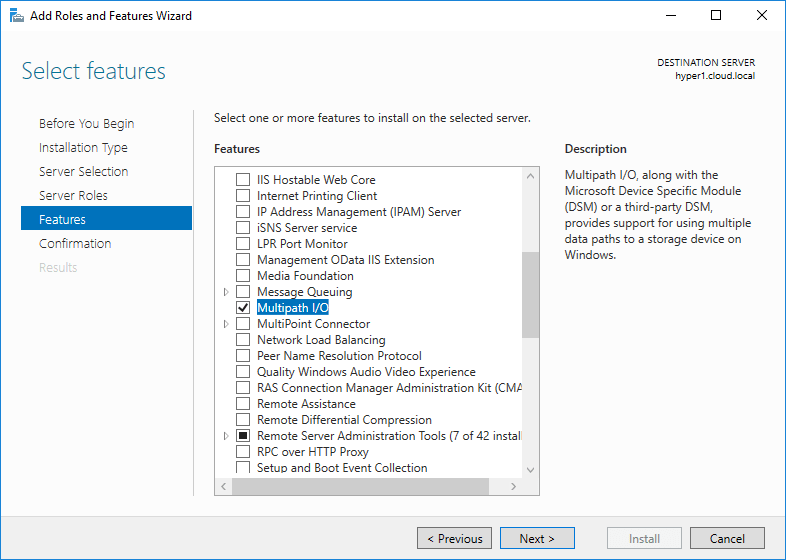

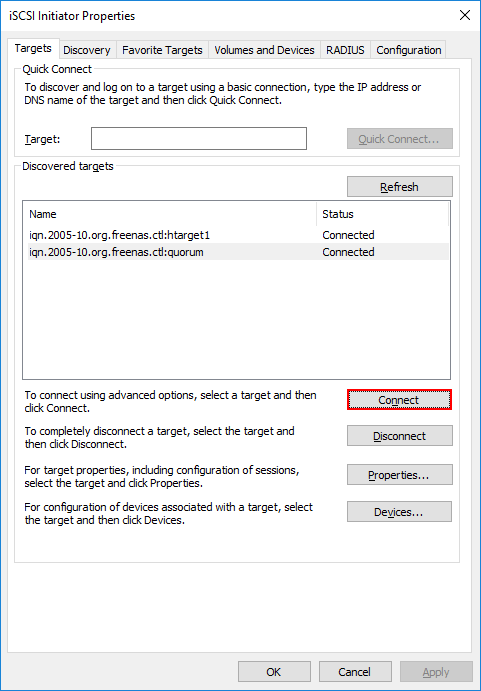

MPIO installs quickly in Windows Server 2016 but will require a reboot.

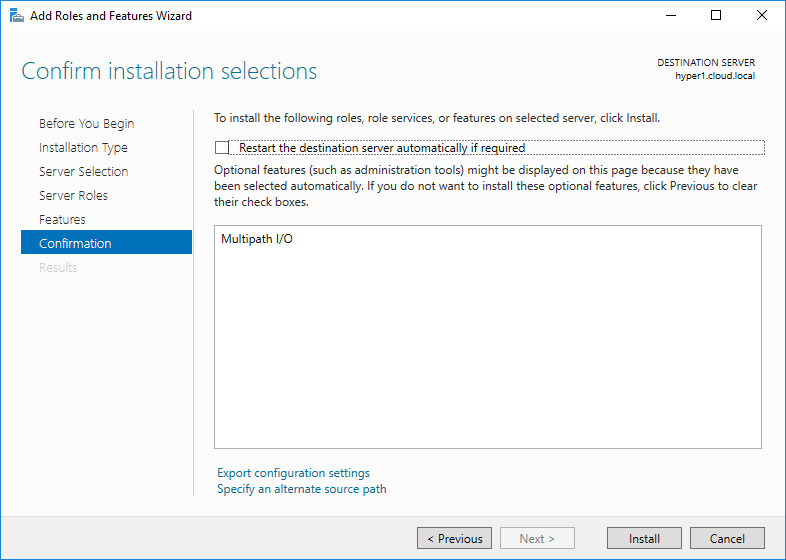

Once MPIO is installed and the server has been rebooted, you can now configure MPIO for iSCSI connections.

- Launch the MPIO utility by typing mpiocpl at a run menu. This will launch the MPIO Properties configuration dialog

- Under the Discover Multi-Paths tab, check the Add support for iSCSI devices check box

- Click OK
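The same result as the GUI steps above can be sketched in PowerShell, which is useful on Server Core hosts without the MPIO control panel. The reboot is left commented out so that it is run deliberately.

```powershell
# Install the Multipath I/O feature
Install-WindowsFeature -Name Multipath-IO

# A reboot is required before MPIO can be configured
# Restart-Computer

# After the reboot: equivalent of checking "Add support for iSCSI devices"
Enable-MSDSMAutomaticClaim -BusType iSCSI

# Confirm which bus types are claimed automatically
Get-MSDSMAutomaticClaimSettings
```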

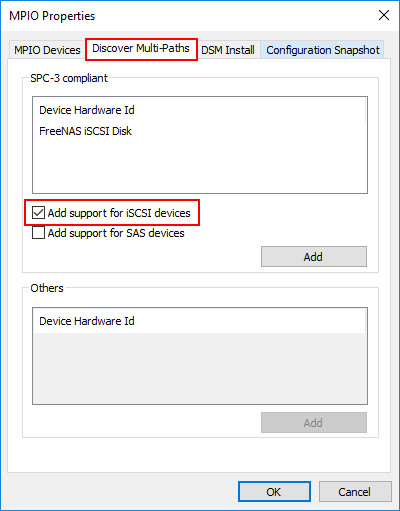

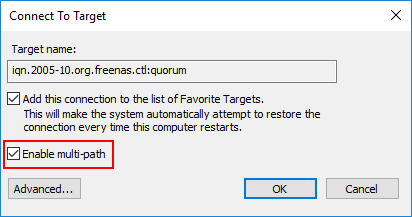

Under the iSCSI configuration, launched by typing iscsicpl, you can Connect to an iSCSI target

The Connect to Target dialog box allows selecting the Enable multi-path checkbox to enable multipathing for iSCSI targets.

You will need to do this for every volume the Hyper-V host is connected to. Additionally, in a configuration where you have two IP addresses bound to two different network cards in your Hyper-V server and two available IPs for the iSCSI targets on your SAN, you would create paths for each IP address connected to the respective IP address of the iSCSI targets. This will create an iSCSI network configuration that is not only fault tolerant but also able to use all available connections for maximum performance.
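A sketch of building one multipath-enabled session per initiator/target pair with the iSCSI cmdlets is shown below. All IP addresses are made-up examples of two initiator NICs and two SAN target portals on separate subnets.

```powershell
# Make sure the iSCSI initiator service is running and starts automatically
Set-Service -Name MSiSCSI -StartupType Automatic
Start-Service -Name MSiSCSI

# Hypothetical initiator/target address pairs, one per path
$paths = @(
    @{ Initiator = "192.168.50.11"; Target = "192.168.50.20" },
    @{ Initiator = "192.168.51.11"; Target = "192.168.51.20" }
)

# Register each target portal through the matching initiator address
foreach ($p in $paths) {
    New-IscsiTargetPortal -TargetPortalAddress $p.Target -InitiatorPortalAddress $p.Initiator
}

# Connect every discovered target once per path, with multipath and persistence enabled
foreach ($t in Get-IscsiTarget) {
    foreach ($p in $paths) {
        Connect-IscsiTarget -NodeAddress $t.NodeAddress `
            -TargetPortalAddress $p.Target -InitiatorPortalAddress $p.Initiator `
            -IsMultipathEnabled $true -IsPersistent $true
    }
}

# Review the resulting sessions
Get-IscsiSession | Format-Table InitiatorPortalAddress, TargetNodeAddress, IsPersistent
```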

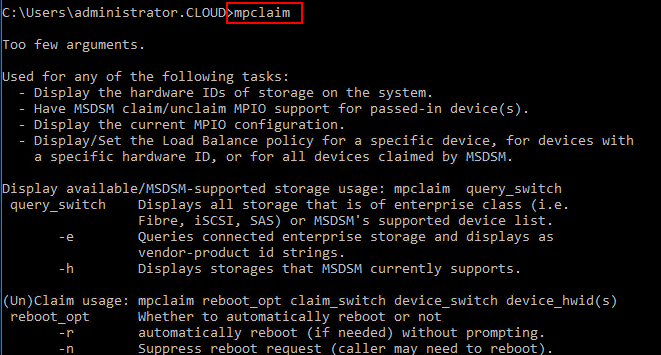

Launch mpclaim from the command line to see the various options available.

To check your current policy for your iSCSI volumes:

- mpclaim -s -d

To verify paths to a specific device, append the device number, for example:

- mpclaim -s -d 0

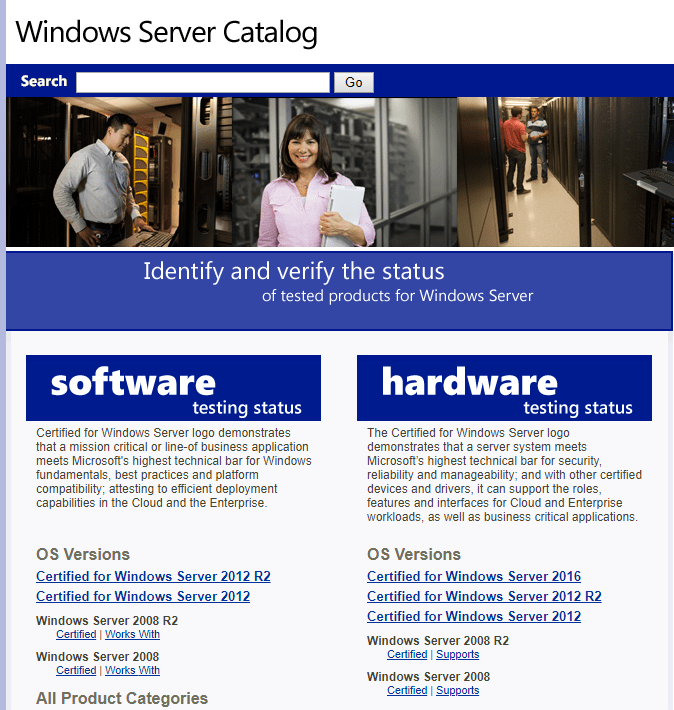

Windows Server 2016 Certified

Hardware needs to be certified to run with Microsoft Windows Server 2016. A great resource for testing whether or not specific hardware is tested and approved for use with Microsoft Windows Server 2016 is the Windows Server Catalog.

- https://www.windowsservercatalog.com/

Physical Servers

- Storage Spaces Direct needs a minimum of (2) servers and can contain a maximum of (16) servers

- It is best practice to use the same make/model of servers

CPU

- Intel/AMD procs – Nehalem/EPYC or newer

Memory

- You need enough memory for Windows Server 2016 itself

- Recommended (4) GB of memory for every 1 TB of cache drive capacity on each server

Boot

- Any Windows supported boot device

- RAID 1 mirror for boot drive is supported

- 200 GB minimum boot drive size

Networking

- (1) 10 Gbps network adapter per server

- Recommended at least 2 NICs for redundancy

- A (2) server configuration supports a “switchless” configuration with a direct cable connection

Drives

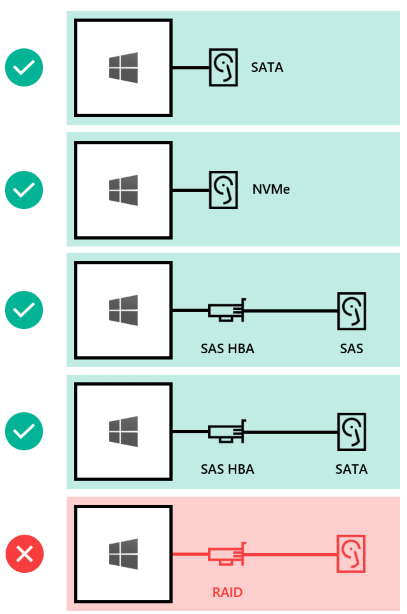

- SATA, SAS, and NVMe supported

- 512n, 512e, and 4K native drives all supported

- SSDs need to have power loss protection

- Direct attached SATA drives supported

- Direct attached NVMe drives

- It is not supported to have a RAID controller card or SAN storage. HBA cards must be configured in pass-through mode

Windows Server 2016 Operating System Requirements

- Windows Server 2016 Datacenter License is required for Storage Spaces Direct

As far as fault tolerance, if you have three or more nodes in your cluster, Storage Spaces Direct is resilient to up to two drive losses or losing two hosts. With two-node clusters, the hit on disk space is quite high since the cluster utilizes a two-way mirroring mechanism for fault tolerance. This means you essentially lose 50% of your disk capacity.

When utilizing 4 node clusters and larger, you can take advantage of erasure coding similar to RAID 5 which is much more efficient from a capacity standpoint (60-80%). However, this erasure coding is heavier on writes. Microsoft has worked on this problem with Multi Resilient Volumes (MRVs) using ReFS. This creates a three-way mirror with erasure coding that acts as a sort of a write cache that works extremely well with Hyper-V virtual machines. Data is safely stored on three different drives on different servers.
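A multi-resilient volume of the kind described above can be sketched with New-Volume by naming both storage tiers. The tier names "Performance" and "Capacity" are the defaults Windows Server 2016 is expected to create when Storage Spaces Direct is enabled on four or more nodes; the volume name and tier sizes are illustrative assumptions.

```powershell
# Check which tier templates exist in the pool and how each one is protected
Get-StorageTier | Format-Table FriendlyName, ResiliencySettingName, PhysicalDiskRedundancy

# Create a volume with a mirror tier (Performance) in front of a parity tier (Capacity).
# "MRV01" and the 200GB/800GB split are example values.
New-Volume -StoragePoolFriendlyName "S2D*" -FriendlyName "MRV01" -FileSystem CSVFS_ReFS `
    -StorageTierFriendlyNames Performance, Capacity -StorageTierSizes 200GB, 800GB
```

A larger mirror tier absorbs more writes at the cost of usable capacity, so the split is a tuning decision for your workload.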

SAN based storage is tried and proven and most vendors today have Hyper-V compatible solutions. Storage Spaces technology has been in existence since Windows Server 2012 and not before. Don’t think that just because you are using Windows Server 2016 Hyper-V that you must use Storage Spaces Direct. Often times, procedures and processes are in place that organizations have used successfully with SAN storage in their virtualized environments that work very well and efficiently. If you are comfortable with these process, procedures, and vendor solutions in your environment, this can certainly be a major reason to stick with SAN storage for Windows Server 2016 Hyper-V.

Storage Spaces Direct is also a great solution and is often much cheaper than high-end SAN storage from various vendors. Additionally, storage provisioning and management become part of the Windows Server 2016 operating system rather than a separate entity that must be managed with disparate tooling and vendor utilities. Storage Spaces Direct can be fully managed and configured from within PowerShell, which is certainly a trusted and powerful solution that is baked into today’s Windows Server OS.
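As a rough illustration of managing Storage Spaces Direct from PowerShell, the sketch below lists poolable drives, enables Storage Spaces Direct, and creates a mirrored volume. It assumes it is run on a node of an existing failover cluster whose hardware meets the requirements listed earlier. The volume name `VMStore01` and the 1 TB size are placeholders, not values from this guide.

```powershell
# Sketch only: run on a node of an existing failover cluster.

# List the drives that are eligible to be added to a storage pool
Get-PhysicalDisk -CanPool $true |
    Select-Object FriendlyName, MediaType, BusType, Size

# Enable Storage Spaces Direct; this claims eligible drives and creates the pool
Enable-ClusterStorageSpacesDirect

# Create a mirrored ReFS Cluster Shared Volume.
# "S2D*" matches the default pool name; "VMStore01" and 1TB are placeholders.
New-Volume -StoragePoolFriendlyName "S2D*" -FriendlyName "VMStore01" `
    -FileSystem CSVFS_ReFS -ResiliencySettingName Mirror -Size 1TB
```

`Enable-ClusterStorageSpacesDirect` prompts for confirmation by default, so it is worth reviewing the drive list first.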

One of the reasons you might pick SAN storage over Storage Spaces Direct today is a need for deduplication. Currently, Storage Spaces Direct does not support deduplication or other more advanced storage features and capabilities that you may get with third-party vendor SAN storage. There is no doubt that future versions of Storage Spaces Direct will include deduplication as well as other expanded storage functionality.

For many, performance is a driving factor when it comes to choosing a storage solution. Can Storage Spaces Direct perform adequately for running production workloads? A published article from Microsoft shows Storage Spaces Direct enabled servers providing 60GB/sec in aggregate throughput using four Dell PowerEdge R730XD servers. The solution can certainly perform and perform well!

- Supports 64 TB virtual hard disk size

- Improved logging mechanisms in VHDX

- Automatic disk alignment

- Dynamic resizing

- Virtual disk sharing

New disk sizes

The 64 TB virtual hard disk size certainly opens up some pretty interesting use cases. For most environments, however, every disk will fit comfortably within this new configuration maximum, and most will not even come close to it. This also negates the need to provision pass-through storage if that was previously necessary for size reasons.

Improved Logging

With the improved logging features that are contained within the VHDX virtual disk metadata, the VHDX virtual disk is further protected from corruption that could happen due to unexpected power failure or power loss. This also opens up the possibility to store custom metadata about a file. Users may want to capture notes about the specific VHDX file such as the operating system contained or patches that have been applied.

Automatic Disk Alignment

Aligning the virtual hard disk format to the disk sector size provides performance improvements. VHDX files automatically align to the physical disk structure. VHDX files also leverage larger block sizes for both the dynamic and differencing disk formats. This greatly improves the performance of dynamically sized VHDX files, making the difference in performance between fixed and dynamic disks negligible. The dynamic sizing option is the preferred option when creating VHDX files.
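A minimal sketch of creating a dynamically sized VHDX and inspecting its sector sizes and alignment is shown below. It assumes the Hyper-V PowerShell module is available on the host. The path and the 200 GB size are hypothetical.

```powershell
# Hypothetical path and size; adjust for your environment
$vhd = "D:\VMs\Data01.vhdx"

# Create a dynamically expanding VHDX (the .vhdx extension selects the format)
New-VHD -Path $vhd -SizeBytes 200GB -Dynamic

# Inspect format, type, sector sizes, and alignment of the new disk
Get-VHD -Path $vhd |
    Select-Object VhdFormat, VhdType, Size, FileSize,
                  LogicalSectorSize, PhysicalSectorSize, Alignment
```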

Shared VHDX

There is a new option starting in Windows Server 2012 R2 to share VHDX virtual hard disks between virtual machines. Why would you do this? Guest clustering is an interesting option to run a clustered Windows Server configuration on top of a physical Hyper-V cluster to allow application high availability on top of virtual machine high availability. With virtual machine high availability alone, if a virtual machine fails, you still suffer the downtime it takes to restart the virtual machine on another Hyper-V host. When running a guest cluster inside a Hyper-V cluster and one virtual machine fails, the second VM in the cluster assumes the role of servicing the application. A shared VHDX allows utilizing a VHDX virtual disk as a shared cluster disk between guest cluster nodes.
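The following sketch shows one way a shared VHDX might be attached to two guest cluster nodes. The VM names `GuestNode1` and `GuestNode2` and the disk path are hypothetical, and the example assumes the VHDX lives on a Cluster Shared Volume. Windows Server 2016 names the sharing switch `-SupportPersistentReservations`; Windows Server 2012 R2 is understood to expose the same switch as `-ShareVirtualDisk`.

```powershell
# Assumptions: "GuestNode1"/"GuestNode2" are hypothetical guest cluster VMs and
# the path points to a location on a Cluster Shared Volume.
$sharedDisk = "C:\ClusterStorage\Volume1\GuestCluster\Shared01.vhdx"

# Create the disk once (fixed size here; the size is a placeholder)
New-VHD -Path $sharedDisk -SizeBytes 100GB -Fixed

# Attach the same VHDX to both guest nodes on their SCSI controllers,
# flagging it as shared so SCSI persistent reservations are supported
foreach ($vm in "GuestNode1", "GuestNode2") {
    Add-VMHardDiskDrive -VMName $vm -ControllerType SCSI -Path $sharedDisk `
        -SupportPersistentReservations
}
```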

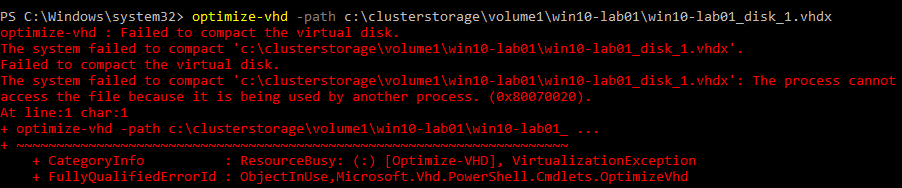

The Optimize-VHD operation can only be performed with the virtual hard disk detached, or attached in read-only mode if the virtual machine is running. If the disk is in use or not attached in the mode required by the operation, the cmdlet returns an error.

PowerShell options available with the optimize-vhd cmdlet:

- Optimize-vhd -Path -Mode Full – Runs the compact operation in Full mode, which scans for zero blocks and reclaims unused blocks. This is only allowed if the virtual hard disk is mounted in read-only mode

- Optimize-vhd -Path -Mode Pretrimmed – Performs the same as Quick mode but does not require the hard disk to be mounted in read-only mode

- Optimize-vhd -Path -Mode Quick – Reclaims unused blocks but does not scan for zero blocks. The virtual hard disk must be mounted in read-only mode

- Optimize-vhd -Path -Mode Retrim – Sends retrims without scanning for zero blocks or reclaiming unused blocks

- Optimize-vhd -Path -Mode Prezeroed – Performs as Quick mode but does not require the virtual disk to be read-only. The unused space detection will be less effective than the read-only scan. This is useful if a tool has been run to zero all the free space on the virtual disk, as this mode can then reclaim the space for subsequent block allocations
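As an illustration of the Full mode requirement, the sketch below mounts a disk read-only, compacts it, and dismounts it again. The path is hypothetical, and the example assumes the disk is not in use by a running virtual machine.

```powershell
# Hypothetical path; the disk must not be in use by a running VM
$vhd = "D:\VMs\Data01.vhdx"

# Full mode requires the disk to be mounted read-only
Mount-VHD -Path $vhd -ReadOnly

# Scan for zero blocks and reclaim unused blocks
Optimize-VHD -Path $vhd -Mode Full

# Release the disk again
Dismount-VHD -Path $vhd

# Compare the file size on disk with the configured maximum size
Get-VHD -Path $vhd | Select-Object Path, FileSize, Size
```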

What are the requirements for resizing VHDX files?

- Must be VHDX, this is not available for VHD files

- Must be attached to SCSI controller

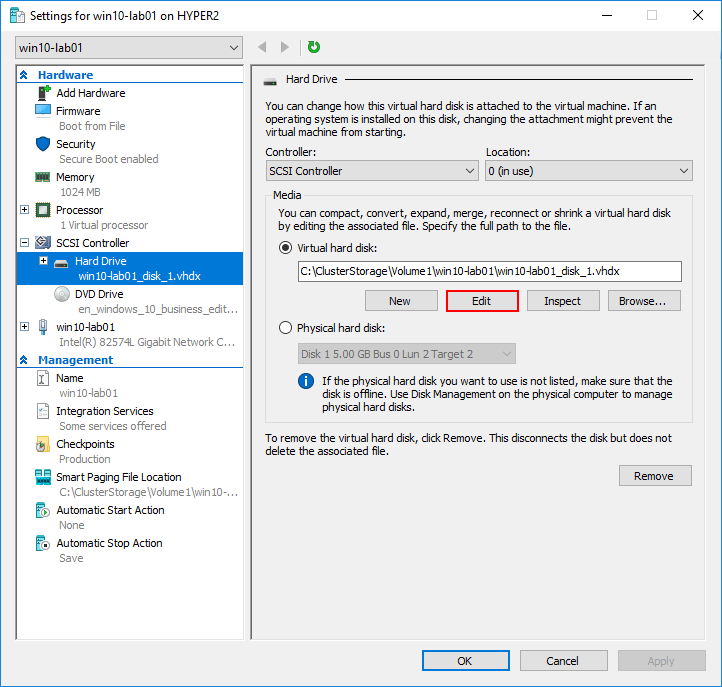

This can be done via the GUI with Hyper-V Manager or using PowerShell. In Hyper-V Manager, choose the Edit option for the virtual disk file and then choose to Expand, Shrink, or Compact.

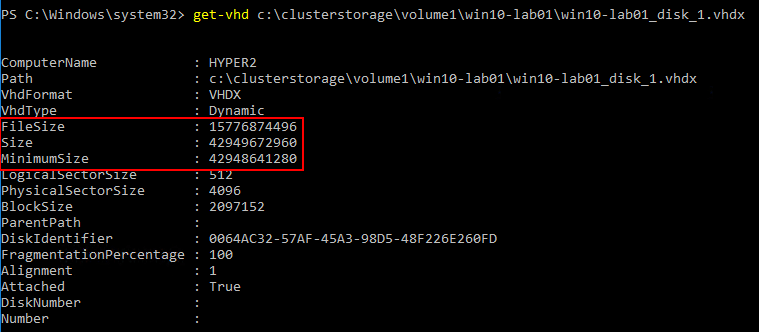

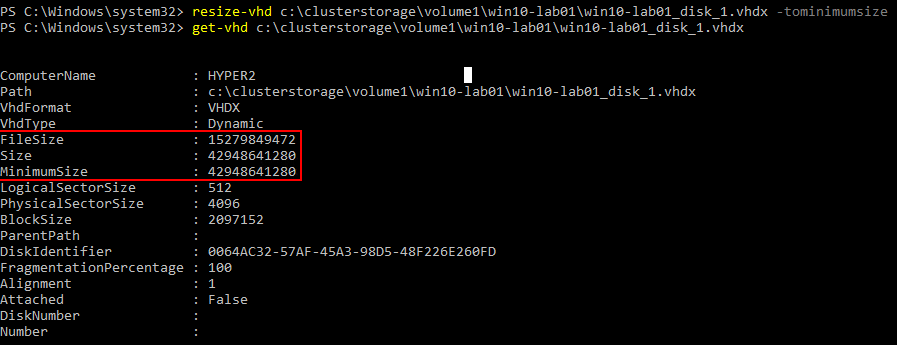

With PowerShell, you run the Resize-VHD cmdlet to resize. You can easily see the information regarding the virtual hard disk with the Get-VHD cmdlet.

Below we are using the Resize-VHD cmdlet to resize the file to the minimum size. Comparing the file size reported before the operation with the file size reported afterward shows that the file size has indeed changed. The -ToMinimumSize parameter resizes the VHDX to the smallest possible size. Again, this can be done while the virtual machine is powered on.

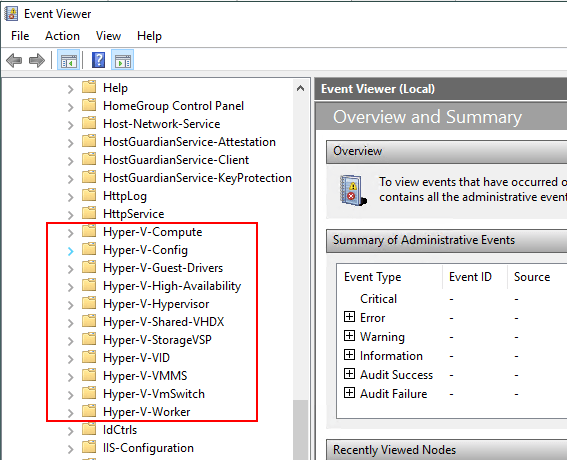
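The resize workflow can be sketched in PowerShell roughly as follows. The path and the 300 GB target are hypothetical. Shrinking generally succeeds only when there is unallocated space at the end of the disk inside the guest, which the `MinimumSize` value reported by Get-VHD reflects.

```powershell
# Hypothetical path; the VHDX is assumed to be attached to a SCSI controller
$vhd = "D:\VMs\Data01.vhdx"

# Inspect the current size, the smallest size the disk can shrink to, and the file size
Get-VHD -Path $vhd | Select-Object Size, MinimumSize, FileSize

# Expand the virtual disk to a new maximum size (placeholder value)
Resize-VHD -Path $vhd -SizeBytes 300GB

# Shrink the virtual disk to the smallest size it currently allows
Resize-VHD -Path $vhd -ToMinimumSize

# Confirm the result
Get-VHD -Path $vhd | Select-Object Size, MinimumSize, FileSize
```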

- Hyper-V-Compute – Captures information regarding the container management API known as the Host Compute Service (HCS) and serves as a low-level management API

- Hyper-V-Config – Captures events related to the virtual machine configuration files. Errors that involve virtual machine configuration files either missing, corrupt, or otherwise inaccessible will be logged here

- Hyper-V-Guest-Drivers – Log file that contains information regarding the Hyper-V integration services components and provides valuable information in regards to troubleshooting issues with the integration components

- Hyper-V-High-Availability – Events related to Hyper-V Windows Server Failover Clusters

- Hyper-V-Hypervisor – Events related to the Hyper-V hypervisor itself. If Hyper-V fails to start, look here. Also, informational messages such as Hyper-V partitions created or deleted will be logged here

- Hyper-V-Shared-VHDX – Information specific to VHDX virtual disks shared between virtual machines is found in this log

- Hyper-V-StorageVSP – Captures information regarding the Storage Virtualization Service Provider. This contains low-level troubleshooting information for virtual machine storage

- Hyper-V-VID – Logs events from the Virtualization Infrastructure Driver regarding memory assignment, dynamic memory, or changing static memory with a running virtual machine

- Hyper-V-VMMS – Virtual Machine Management Service events which are valuable in troubleshooting a virtual machine that won’t start or a failed Live Migration operation

- Hyper-V-VmSwitch – Contains events from the virtual network switches

- Hyper-V-Worker – Captures information from the Hyper-V worker process, which is responsible for the actual running of the virtual machine

To find the various Hyper-V specific event logs in the Windows Event Viewer, navigate to Applications and Services Logs >> Microsoft >> Windows
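The same logs can also be queried from PowerShell with Get-WinEvent. The sketch below lists the Hyper-V logs on the local host and pulls recent problems from the VMMS admin log. The choice of that log and the limit of 20 events are illustrative only.

```powershell
# List every Hyper-V event log on the local host with its enabled state and record count
Get-WinEvent -ListLog "Microsoft-Windows-Hyper-V-*" |
    Select-Object LogName, IsEnabled, RecordCount

# Show up to 20 recent critical, error, and warning events from the VMMS admin log
# (Level 1 = Critical, 2 = Error, 3 = Warning)
Get-WinEvent -FilterHashtable @{
    LogName = "Microsoft-Windows-Hyper-V-VMMS-Admin"
    Level   = 1, 2, 3
} -MaxEvents 20 -ErrorAction SilentlyContinue |
    Select-Object TimeCreated, Id, LevelDisplayName, Message
```

`-ErrorAction SilentlyContinue` is used because Get-WinEvent raises an error when no events match the filter.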

There are a couple of steps to take advantage of the PowerShell module from GitHub. First you need to download and import the PowerShell module, then you reproduce the issue which should capture the relevant information in the logs.

Below is a synopsis of the steps found here: https://blogs.technet.microsoft.com/virtualization/2017/10/27/a-great-way-to-collect-logs-for-troubleshooting/

Download the PowerShell module and import it

    # Download the current module from GitHub
    Invoke-WebRequest "https://github.com/MicrosoftDocs/Virtualization-Documentation/raw/live/hyperv-tools/HyperVLogs/HyperVLogs.psm1" -OutFile "HyperVLogs.psm1"

    # Import the module
    Import-Module .\HyperVLogs.psm1

Reproduce the Issue and Capture the Logs

    # Enable Hyper-V event channels to assist in troubleshooting
    Enable-EventChannels -HyperVChannels VMMS, Config, Worker, Compute, VID

    # Capture the current time to a variable
    $startTime = [System.DateTime]::Now

    # Reproduce the issue here

    # Write events that happened after "startTime" for the defined channels to a named directory
    Save-EventChannels -HyperVChannels VMMS, Config, Worker, Compute, VID -StartTime $startTime

    # Disable the analytical and operational logs; by default admin logs are left enabled
    Disable-EventChannels -HyperVChannels VMMS, Config, Worker, Compute, VID

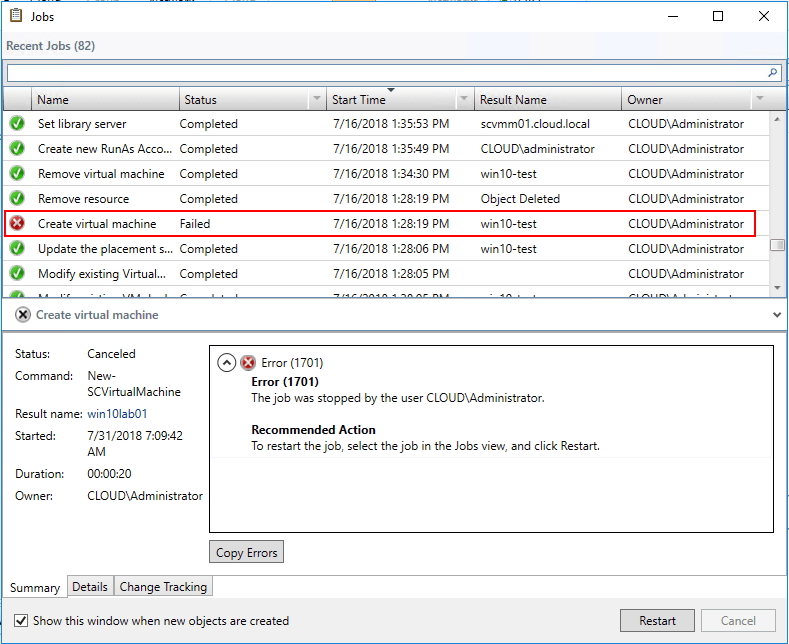

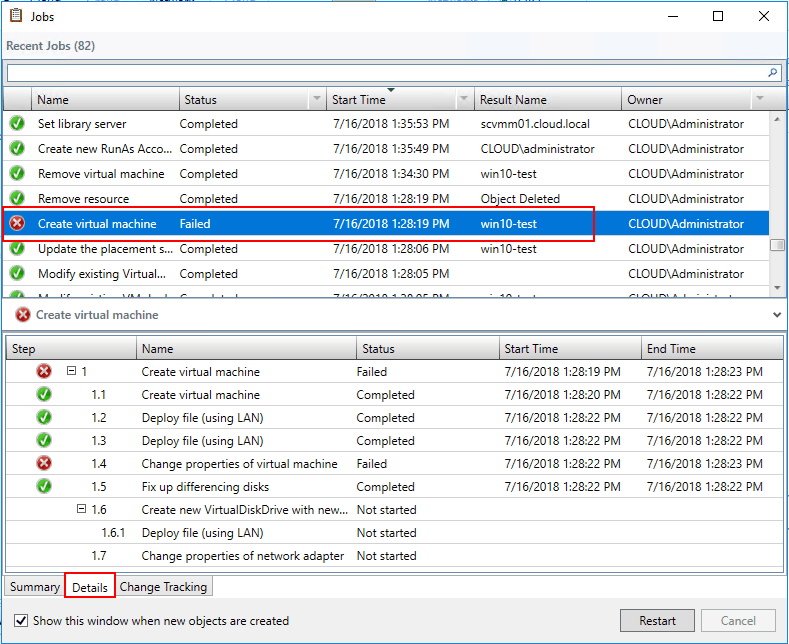

Below, a failed create virtual machine task shows the status of Failed. What caused the job to fail? The Details view allows digging further.

On the Details tab, System Center Virtual Machine Manager provides a detailed step-by-step overview of all the steps involved in the particular task executed in SCVMM. Note, below, how SCVMM enumerates the individual steps and shows the exact point at which the task failed – “change properties of virtual machine”. This is extremely helpful when you need to determine exactly what is causing a larger task to fail.

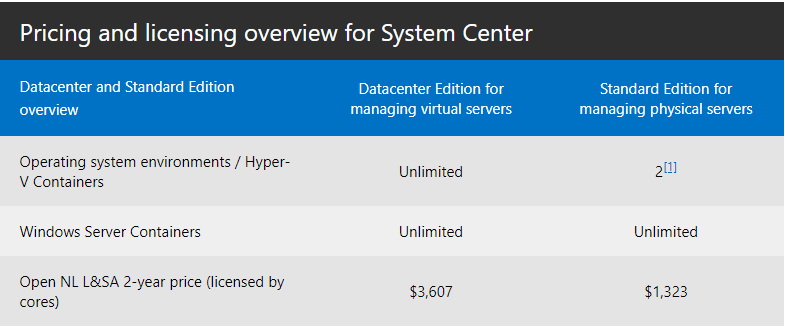

With System Center Virtual Machine Manager, all of these various features and functionality and much more are brought under a single pane-of-glass dashboard, allowing consolidated management of the entire Hyper-V infrastructure. System Center 2016 brings cloud support to the mix, enabling seamless management of complex hybrid cloud environments with both on-prem and Azure public cloud workloads.

As mentioned, System Center Virtual Machine Manager is a paid product that is licensed and integrated with the System Center family of products. You cannot license System Center Virtual Machine Manager as a standalone product. For further details on buying/licensing System Center, take a look at the following link:

- Windows PowerShell – Windows PowerShell is the premier scripting language for use by Windows Server administrators today. SCVMM allows IT administrators to take advantage of the fully scriptable capabilities of SCVMM and run scripts against multiple VMs.

- Integrated Physical to Virtual (P2V) Conversions – Most organizations today are looking to virtualize physical resources if they still have physical servers around. SCVMM allows easily performing P2V operations

- Virtual Machines Intelligent Placement – SCVMM allows automatically profiling Hyper-V hosts in the environment and placing VMs on the host that has the best fit for hosting those resources

- Centralized Resource Management and Optimization – One of the primary advantages of SCVMM is the centralized management it offers. Hyper-V administrators have all the tools and management for Hyper-V hosts and clusters in a single location

- Virtual Machine Rapid Deployment and Migration – SCVMM allows creating virtual machine templates that allow rapidly deploying virtual machines from master VM templates. Service templates allow creating complete groups of virtual machines and deploying them together as a single object that provides one or more applications

- Centralized Resource Library – This component of SCVMM allows building a library of all resources required to build virtual machines, including ISO images, scripts, profiles, guest operating system profiles, and virtual machine templates. The Centralized Library facilitates the rapid deployment of virtual machines

- Centralized Monitoring and Reporting – Centralized monitoring and reporting of the entire Hyper-V infrastructure allows administrators to quickly and easily monitor the environment

- Self-service Provisioning – SCVMM administrators can delegate controlled access to end users for specific virtual machines, templates, and other resources through a web-based portal. This is especially powerful in DEV/TEST where developers may need to quickly provision new VMs for themselves according to the controls

- Existing SAN Networks – SCVMM allows taking advantage of existing SAN networks for use in the environment. SCVMM can automatically detect and use existing SAN infrastructure to transfer virtual machine files

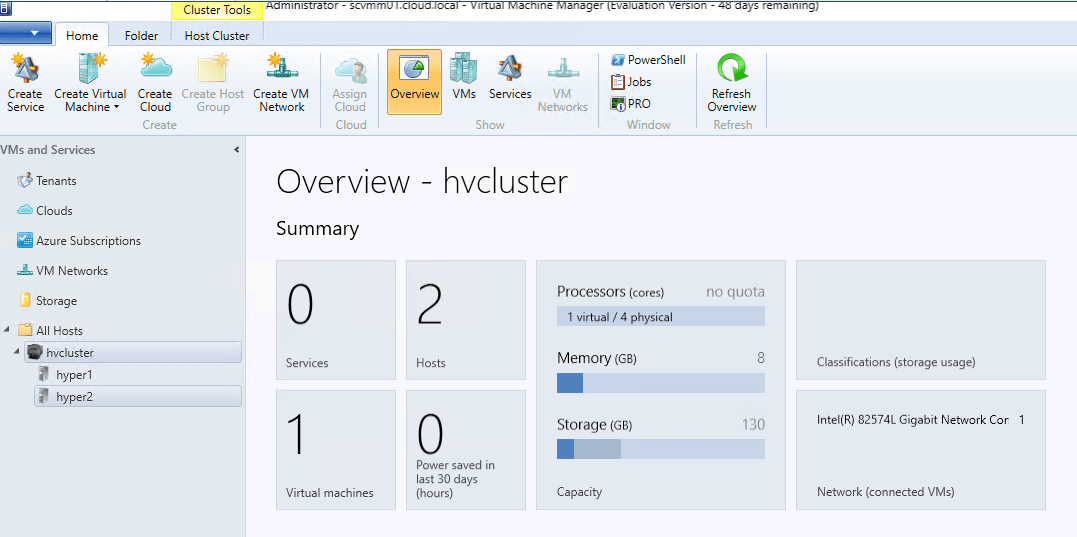

System Center Virtual Machine Manager allows seeing an overview of current performance not only across Hyper-V hosts, but also Hyper-V clusters.

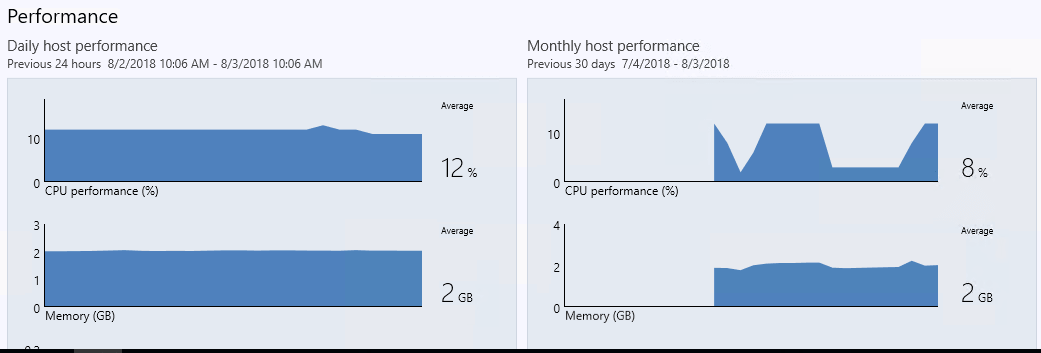

A deeper look at Performance, including Daily host performance and Monthly host performance metrics.

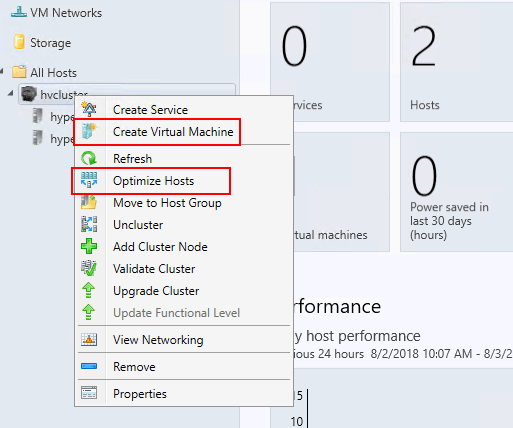

Using System Center Virtual Machine Manager, you can easily create High Availability virtual machines. Additionally, hosts can easily be optimized.

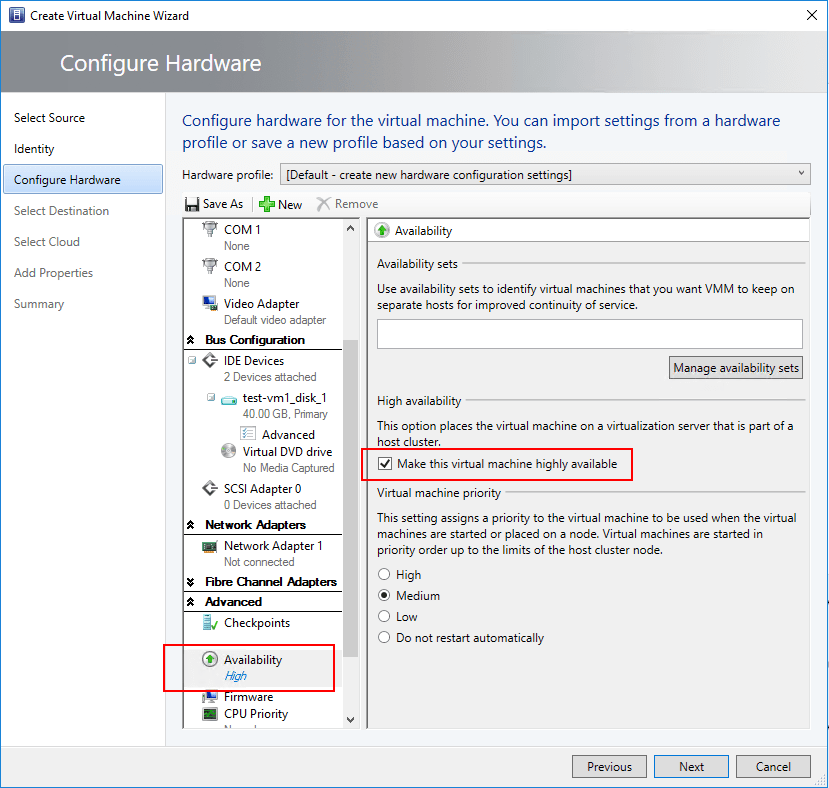

In the Create Virtual Machine Wizard, SCVMM allows configuring the Availability of the virtual machine that is housed on a Hyper-V cluster as well as the Start priority.

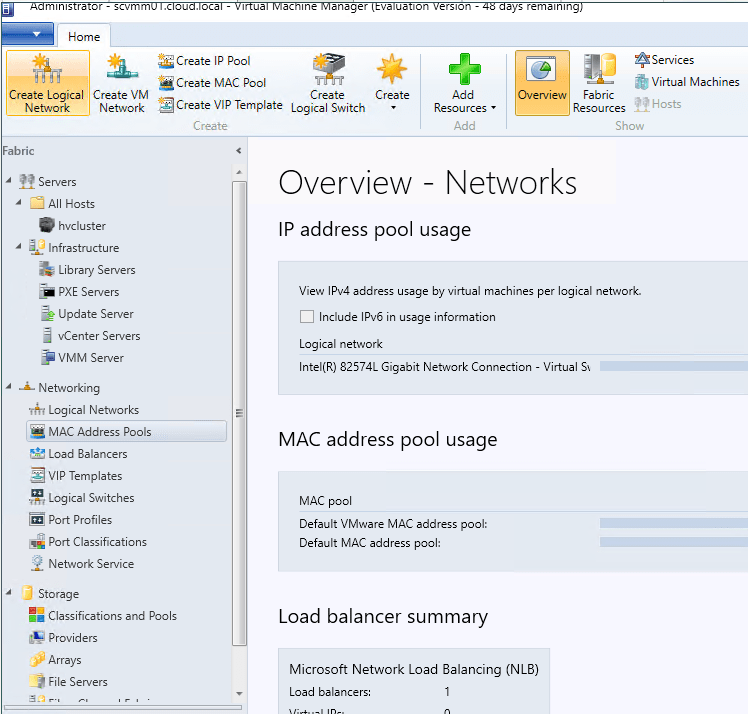

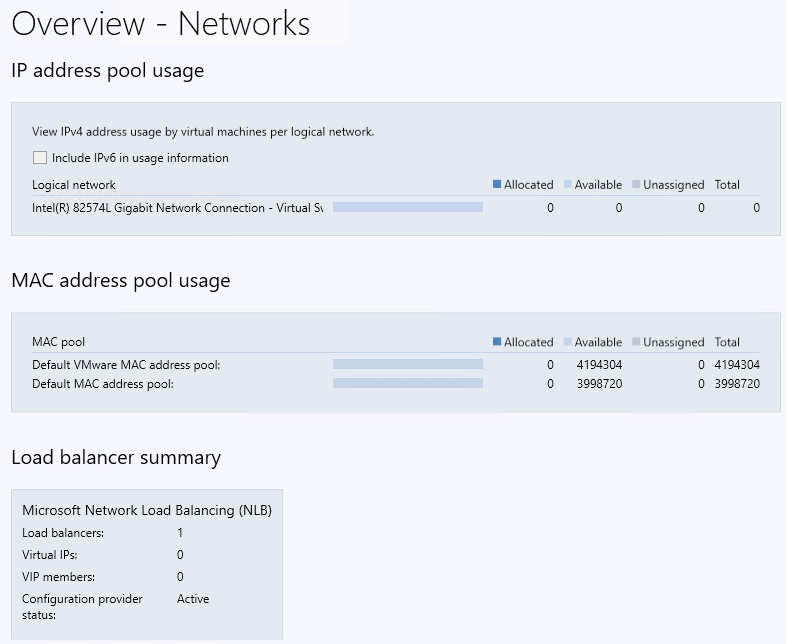

Configuring, monitoring, and managing Hyper-V networks is easily done with SCVMM. It allows administrators to easily see all network adapters on hosts, logical networks, virtual switches, MAC addresses, etc.

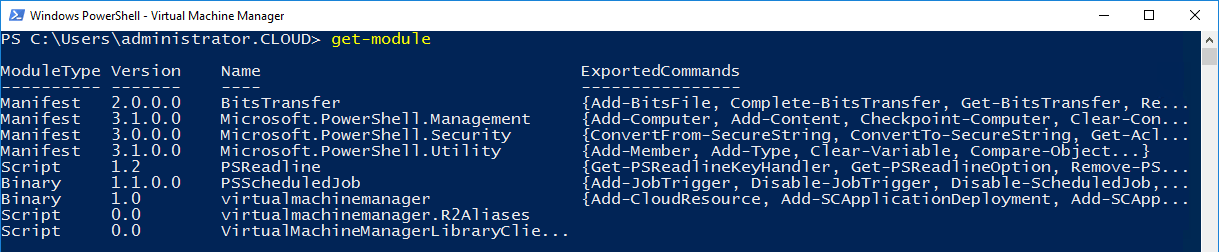

Launching PowerShell from Virtual Machine Manager yields a number of modules that allow programmatically interacting with SCVMM and a Hyper-V environment.
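As a small, hedged illustration of the SCVMM cmdlets, the sketch below connects to a VMM management server and lists hosts and virtual machines. It assumes the VMM console, and with it the `virtualmachinemanager` module, is installed on the machine running the script. The server name `vmm01.contoso.local` is hypothetical.

```powershell
# Load the VMM cmdlets (installed with the VMM console)
Import-Module virtualmachinemanager

# Connect to the VMM management server; the server name is a placeholder
Get-SCVMMServer -ComputerName "vmm01.contoso.local" | Out-Null

# List the Hyper-V hosts managed by this VMM server
Get-SCVMHost | Sort-Object Name | Select-Object Name

# List managed virtual machines with their current status
Get-SCVirtualMachine | Sort-Object Name | Select-Object Name, Status

# See how many cmdlets the module exposes
(Get-Command -Module virtualmachinemanager).Count
```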

SCVMM allows a wealth of visibility and configurability from a Fabric standpoint. Take a look at the configuration options for Networking as an example. Administrators can configure:

- Logical Networks

- MAC Address Pools

- Load Balancers

- VIP Templates

- Logical Switches

- Port Profiles

- Port Classifications

- Network Service